The Double-Edged Sword of AI in Medical Diagnostics: Benefits, Challenges, and the Path Forward

Artificial intelligence (AI) is transforming medical diagnostics by making it possible to analyze complex datasets and improve diagnostic precision. AI algorithms, particularly those based on machine learning and deep learning, can process large volumes of data from diverse sources, including genetic information, electronic medical records, and medical images. Their ability to detect subtle patterns and generate accurate predictions has improved the efficiency and accuracy of many diagnostic tasks. However, integrating AI into healthcare also raises substantial challenges, including ethical dilemmas, legal liability, and regulatory hurdles.

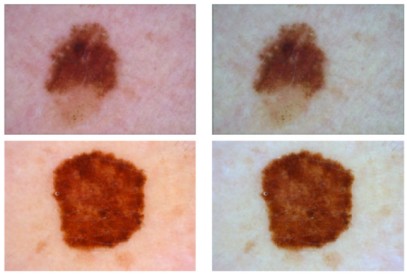

Fig.1 Skin cancer diagnostic process using deep learning. (Naili Y. T., et al., 2025)

AI algorithms have shown considerable promise for improving diagnostic accuracy. In radiology, AI can analyze medical images to detect diseases such as cancer at early stages; some studies have reported that AI systems identify tumors in mammograms and CT scans with accuracy comparable to, or in certain settings exceeding, that of human radiologists. In digital pathology, AI can scrutinize histopathology images to identify cancer biomarkers, supporting more accurate treatment planning. Beyond improving patient outcomes, this can ease the workload on healthcare professionals, allowing them to focus on more complex cases.
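The word "precision" is used loosely in comparisons like these, and the conclusion can change depending on which metric is reported. The following minimal Python sketch shows how the standard metrics are derived from a confusion matrix; the `ConfusionMatrix` class and all counts are hypothetical and are not taken from any study cited here.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    """Counts from comparing model calls against a reference standard (hypothetical)."""
    tp: int  # diseased, flagged positive
    fp: int  # healthy, flagged positive
    tn: int  # healthy, flagged negative
    fn: int  # diseased, flagged negative

    def sensitivity(self) -> float:
        # Fraction of truly diseased cases the model detects (recall).
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        # Fraction of healthy cases the model correctly clears.
        return self.tn / (self.tn + self.fp)

    def precision(self) -> float:
        # Positive predictive value: fraction of positive calls that are correct.
        return self.tp / (self.tp + self.fp)

    def accuracy(self) -> float:
        # Fraction of all cases classified correctly.
        total = self.tp + self.fp + self.tn + self.fn
        return (self.tp + self.tn) / total


# Hypothetical counts, for illustration only.
cm = ConfusionMatrix(tp=90, fp=30, tn=870, fn=10)
print(f"sensitivity={cm.sensitivity():.2f}")  # sensitivity=0.90
print(f"specificity={cm.specificity():.3f}")  # specificity=0.967
print(f"precision={cm.precision():.2f}")      # precision=0.75
print(f"accuracy={cm.accuracy():.2f}")        # accuracy=0.96
```

With these illustrative counts, accuracy (0.96) looks far better than precision (0.75) because healthy cases dominate the sample, which is why a fair comparison with radiologists needs the same metric, dataset, and reference standard.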

A key strength of AI in medical diagnostics is its ability to accelerate the diagnostic process. AI algorithms can process large datasets rapidly, delivering results sooner and enabling quicker treatment decisions. This is particularly important in emergencies, where timely diagnosis can be life-saving. For instance, AI can analyze imaging and other clinical data in near real time to flag critical conditions such as stroke or heart attack, so that clinicians can intervene immediately.
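Speed gains in practice often come from triage: the model does not replace the reader but reorders the reading queue so that suspected critical studies are opened first. The sketch below assumes a hypothetical urgency score between 0 and 1 produced by some upstream model; `TriageWorklist`, the study IDs, and the scores are illustrative, and real systems would integrate with hospital imaging and reporting software instead.

```python
import heapq
import itertools
from dataclasses import dataclass, field


@dataclass(order=True)
class WorklistItem:
    # Lower sort key = read sooner; the urgency score is negated so high urgency comes first.
    sort_key: float
    seq: int  # tie-breaker: preserves arrival order for equal scores
    study_id: str = field(compare=False)


class TriageWorklist:
    """Hypothetical reading worklist reordered by an AI urgency score in [0, 1]."""

    def __init__(self) -> None:
        self._heap: list[WorklistItem] = []
        self._counter = itertools.count()

    def add(self, study_id: str, urgency: float) -> None:
        item = WorklistItem(-urgency, next(self._counter), study_id)
        heapq.heappush(self._heap, item)

    def next_study(self) -> str:
        # Pop the most urgent study (earliest arrival among ties).
        return heapq.heappop(self._heap).study_id


worklist = TriageWorklist()
worklist.add("CT-001", urgency=0.05)  # routine
worklist.add("CT-002", urgency=0.97)  # model suspects a critical finding
worklist.add("CT-003", urgency=0.05)  # routine, arrived after CT-001
print([worklist.next_study() for _ in range(3)])  # ['CT-002', 'CT-001', 'CT-003']
```

A heap keeps each insertion and retrieval at O(log n), and the arrival counter ensures that studies with equal scores are still read in the order they arrived.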

AI is an important driver of personalized medicine. By analyzing a patient's genetic profile and medical history, AI algorithms can help tailor treatment plans to individual needs, which can improve treatment efficacy and reduce the risk of adverse reactions. For example, AI models can predict how patients are likely to respond to specific therapies, such as chemotherapy for breast cancer, supporting more targeted treatment planning.

Ethical and Legal Challenges in AI-Based Diagnostics

A significant challenge in AI-driven diagnostics is algorithmic bias. AI models are trained on large datasets, and if these datasets are imbalanced or skewed, the resulting algorithms may produce unfair or biased outcomes. For example, a model trained primarily on data from one demographic group may perform less accurately for patients from other groups, potentially leading to misdiagnoses or inequitable care. Identifying and mitigating bias in AI algorithms is therefore crucial for patient safety and for maintaining trust in AI-driven healthcare.
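One common way to surface this kind of bias is to report performance separately for each subgroup rather than only in aggregate. The sketch below computes per-group sensitivity and flags groups that trail the best-performing group; the group labels, the data, and the 5-percentage-point `max_gap` threshold are all hypothetical choices, and a real audit would examine several metrics with confidence intervals.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Per-group sensitivity from (group, y_true, y_pred) tuples.

    y_true / y_pred are 1 for disease present / flagged, 0 otherwise.
    Only diseased cases (y_true == 1) contribute to sensitivity.
    """
    detected = defaultdict(int)
    diseased = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            diseased[group] += 1
            detected[group] += y_pred
    return {g: detected[g] / diseased[g] for g in diseased}


def flag_gaps(per_group, max_gap=0.05):
    """Return groups whose sensitivity trails the best group by more than max_gap."""
    best = max(per_group.values())
    return sorted(g for g, s in per_group.items() if best - s > max_gap)


# Hypothetical evaluation data: group A is well represented in training, group B is not.
records = (
    [("A", 1, 1)] * 92 + [("A", 1, 0)] * 8
    + [("B", 1, 1)] * 15 + [("B", 1, 0)] * 5
)
per_group = sensitivity_by_group(records)
print(per_group)             # {'A': 0.92, 'B': 0.75}
print(flag_gaps(per_group))  # ['B']
```

Pooled across both groups, sensitivity here is 107/120 (about 0.89), a figure that hides how much more often group B's cases are missed.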

Data privacy and security are paramount when using AI in medical diagnostics. AI systems rely on access to large amounts of highly sensitive medical information, which must be protected against unauthorized access and misuse. Data breaches can have severe consequences for patients, including identity theft and loss of privacy. Stringent data protection regulations and robust security measures are therefore essential to safeguard patient information.
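Among the security measures mentioned, pseudonymization is often applied before data reach an AI training pipeline. The sketch below replaces a direct identifier with a keyed hash (HMAC-SHA256) using only Python's standard library; the record fields and identifier format are hypothetical, and key handling is deliberately simplified. Pseudonymization reduces, but does not remove, re-identification risk: remaining fields such as age or dates can still identify a patient, so it complements rather than replaces access controls and encryption.

```python
import hashlib
import hmac
import secrets

# Simplified for illustration: in practice the key would be held in a key-management
# system, separate from the data it protects.
PSEUDONYM_KEY = secrets.token_bytes(32)


def pseudonymize(patient_id: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    The same patient always maps to the same token under one key, so records can
    still be linked for model training, but the token cannot be recomputed
    without the key.
    """
    return hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical record; field names are illustrative only.
record = {"patient_id": "MRN-0012345", "age": 57, "finding": "suspicious mass"}
safe_record = {**record, "patient_id": pseudonymize(record["patient_id"])}
print(safe_record["patient_id"][:16], "...")
```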

Obtaining informed consent is a fundamental aspect of patient care, but it can be particularly complex in the context of AI-based diagnostics. Patients must be fully informed about how their data will be used, the potential risks and benefits associated with AI, and the nature of the diagnostic process. However, the intricate nature of AI systems often makes it challenging for patients to fully understand these issues. Ensuring that patients provide truly informed consent is vital to maintaining trust and respecting patient autonomy.

Determining liability in the event of an AI-related diagnostic error is a complex and critical issue. If an AI system provides incorrect diagnostic recommendations, it can be difficult to determine whether the responsibility lies with the AI developer, the healthcare provider, or both. Clear legal frameworks and guidelines are necessary to define the responsibilities and liabilities of all parties involved in the use of AI in medical diagnostics.

In Europe, AI used in medical diagnostics is primarily regulated under the Medical Devices Regulation (MDR) (EU) 2017/745 and the In Vitro Diagnostic Medical Devices Regulation (IVDR) (EU) 2017/746. These regulations require AI-based medical devices, like other medical device software, to undergo conformity assessment appropriate to their risk class to demonstrate that they meet stringent safety and performance requirements. The MDR and IVDR emphasize clinical evidence, transparency, and post-market surveillance, and the processing of patient data is additionally subject to the General Data Protection Regulation (GDPR). Together, these frameworks aim to ensure that such technologies are used responsibly and safely.

In the United States, the Food and Drug Administration (FDA) authorizes AI-based medical devices under a risk-based framework. Depending on a device's risk and whether a suitable predicate device exists, the FDA reviews it through the 510(k) clearance, De Novo classification, or Premarket Approval (PMA) pathway, with increasing attention to the entire product lifecycle to protect patient safety. This approach aims to balance innovation against the need for robust evidence that AI technologies are safe and effective for clinical use.

Other countries have developed their own regulatory frameworks for AI in medical diagnostics. In India, for example, the Central Drugs Standard Control Organization (CDSCO) regulates AI-based devices under the country's medical device regulations, which set requirements for safety and performance. Although regulatory approaches vary from country to country, they share the goal of protecting patients and promoting the ethical, responsible use of AI in healthcare.

One study of AI algorithms for cervical cancer detection found that initially reported high accuracy was not replicated in clinical settings. In that case, misdiagnosis of ambiguous cervical images resulted in legal liability being shared between the AI software developer and the healthcare provider. The case underscores the importance of thorough testing and validation of AI algorithms before clinical use.
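One general reason headline accuracy fails to carry over to practice (not necessarily what happened in the study above) is that the positive predictive value (PPV) of a test depends on disease prevalence. By Bayes' theorem, PPV = sensitivity × prevalence / (sensitivity × prevalence + (1 - specificity) × (1 - prevalence)). The sketch below applies this formula to a hypothetical classifier; the sensitivity, specificity, and prevalence values are illustrative only.

```python
def positive_predictive_value(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Probability that a positive result is a true positive (Bayes' theorem)."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


# The same hypothetical classifier (95% sensitivity, 95% specificity) in two settings.
for setting, prevalence in [("enriched validation set", 0.50), ("screening population", 0.01)]:
    ppv = positive_predictive_value(0.95, 0.95, prevalence)
    print(f"{setting}: PPV = {ppv:.2f}")
# enriched validation set: PPV = 0.95
# screening population: PPV = 0.16
```

At 1% prevalence, roughly five out of six positive calls would be false alarms, even though sensitivity and specificity are unchanged.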

Another case study examined an AI algorithm used to detect aortic stenosis through electrocardiograms. The study found that the algorithm's performance degraded when applied to a diverse patient population due to spectrum bias in the training data. This highlights the need for diverse and representative training datasets to ensure fairness and accuracy in AI diagnostics.

Regulations should require full transparency in the data collection and training processes of AI algorithms. This includes ensuring that datasets are diverse and representative of different populations to reduce bias. Additionally, independent audits of AI systems can help ensure compliance with safety standards and ethical guidelines.
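A basic transparency check is to compare the composition of the training data with the population in which the device is intended to be used. The sketch below flags groups whose share of the training set falls below a chosen fraction of their expected share; the group names, counts, population shares, and the `tolerance=0.5` cutoff are hypothetical, and which attributes matter (for example age, sex, ethnicity, scanner manufacturer, or clinical site) depends on the intended use.

```python
def representation_gaps(train_counts, population_shares, tolerance=0.5):
    """Flag groups under-represented in training data relative to a target population.

    train_counts: {group: number of training examples}
    population_shares: {group: expected fraction in the intended-use population}
    A group is flagged when its training share is below tolerance * expected share.
    Returns {group: (observed_share, expected_share)} for flagged groups.
    """
    total = sum(train_counts.values())
    flagged = {}
    for group, expected in population_shares.items():
        observed = train_counts.get(group, 0) / total
        if observed < tolerance * expected:
            flagged[group] = (round(observed, 3), expected)
    return flagged


# Hypothetical training-set composition vs. a hypothetical intended-use population.
train_counts = {"group_1": 8200, "group_2": 1500, "group_3": 300}
population_shares = {"group_1": 0.60, "group_2": 0.25, "group_3": 0.15}
print(representation_gaps(train_counts, population_shares))
# {'group_3': (0.03, 0.15)}
```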

A more rigorous evaluation framework is needed to improve the fairness and accuracy of AI diagnostics. This includes independent third-party assessments, which reduce potential conflicts of interest and help ensure that algorithms meet high performance standards before clinical use. The Total Product Lifecycle (TPLC) approach, proposed by the FDA for AI/ML-based software, aims to balance innovation with patient safety by letting developers iterate faster while maintaining stringent quality standards.
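An independent assessment usually fixes its acceptance criteria before the test results are known. As one possible approach (an assumption, not a criterion prescribed by the FDA or any regulation), the sketch below passes an algorithm only if the lower bound of the 95% Wilson score interval for sensitivity clears a pre-specified target; the target and the test-set counts are hypothetical.

```python
import math


def wilson_lower_bound(successes: int, n: int, z: float = 1.96) -> float:
    """Lower limit of the Wilson score interval for a binomial proportion (z=1.96 for ~95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p_hat = successes / n
    denom = 1 + z**2 / n
    centre = p_hat + z**2 / (2 * n)
    margin = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2))
    return (centre - margin) / denom


def meets_acceptance(detected: int, diseased: int, min_sensitivity: float) -> bool:
    """Pass only if the interval's lower bound clears the pre-specified target."""
    return wilson_lower_bound(detected, diseased) >= min_sensitivity


# Hypothetical independent test-set results against a hypothetical 85% sensitivity target.
print(meets_acceptance(88, 100, 0.85))    # False (lower bound about 0.80)
print(meets_acceptance(880, 1000, 0.85))  # True (lower bound about 0.86)
```

Both hypothetical results have the same point estimate (0.88), but only the larger test set provides enough statistical confidence to clear the 0.85 target.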

To address the challenge of informed consent, healthcare providers must ensure that patients receive clear and comprehensive information about the use of AI in their care. This includes explaining the potential risks and benefits, the nature of the diagnostic process, and how their data will be used. Patient education is crucial to ensuring that patients can make informed decisions about their healthcare.

Updating legal frameworks to clarify the responsibilities and liabilities of healthcare providers, AI developers, and other stakeholders is essential. This includes defining the legal obligations of each party in the event of diagnostic errors and ensuring that AI is used ethically and fairly. Adopting a joint liability approach or common enterprise theory can encourage all parties to prioritize patient safety and ethical use of AI.

AI has the potential to revolutionize medical diagnostics by improving accuracy, efficiency, and personalization of care. However, its use must be carefully regulated to address legal, ethical, and regulatory challenges. Ensuring transparency, fairness, and patient safety is crucial to realizing the full potential of AI in healthcare. By implementing rigorous evaluation frameworks, promoting transparency in data collection and use, and updating legal frameworks to clarify responsibilities and liabilities, we can create a safer and more equitable environment for the use of AI in medical diagnostics. Continued research and collaboration between technologists, healthcare providers, and policymakers will be essential to navigating the complexities of AI and ensuring its benefits are realized in a way that prioritizes patient well-being and fairness.

This article is for research use only. Do not use in any diagnostic or therapeutic application.
