Evaluating the Clinical Performance of In Vitro Diagnostic Tests: A Comprehensive Guide

In vitro diagnostic (IVD) tests are indispensable tools for detecting, managing, and preventing disease. From simple blood glucose tests to complex genetic analyses, they supply data that shape clinical decisions. Their clinical performance must therefore be rigorously evaluated to establish reliability and validity. This guide covers the evaluation process for IVD tests, with a focus on clinical performance measures, study design, and regulatory considerations.

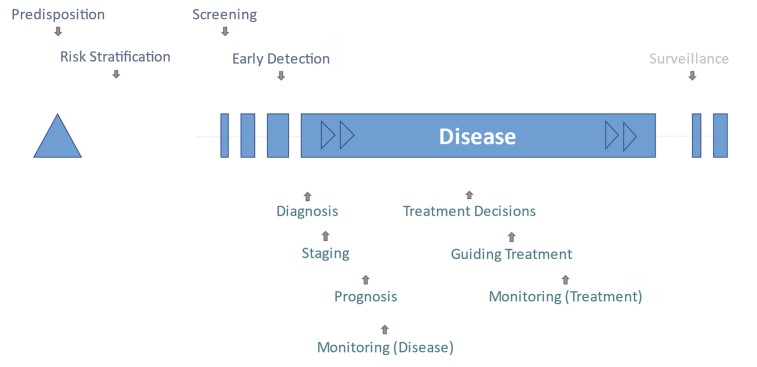

Fig.1 Schema of test purposes during the course of disease. (Lord S. J., et al., 2025)


The clinical performance evaluation of IVD tests is crucial to ensure they meet the high standards required for clinical use. Regulatory authorities like the U.S. Food and Drug Administration (FDA) and the European Medicines Agency (EMA) demand that IVD tests exhibit robust clinical performance before approval. This evaluation process assesses the test's ability to accurately detect or predict diseases or conditions, directly influencing patient outcomes.

For example, a study published in the Journal of the American College of Cardiology emphasized the vital role of high-sensitivity cardiac troponin (hs-cTn) assays in diagnosing myocardial infarction. The study showed that these assays, when evaluated for clinical performance, could significantly enhance diagnostic accuracy and patient management. This highlights the necessity for rigorous evaluation protocols to ensure that IVD tests provide reliable and actionable results.

Clinical performance measures are the quantitative metrics used to assess the accuracy and reliability of IVD tests. Sensitivity and specificity are the primary measures, indicating the test's ability to correctly identify true positives and true negatives, respectively. These measures are crucial for evaluating diagnostic and screening tests.

Sensitivity is the proportion of individuals with the condition who test positive (true positives), while specificity is the proportion of individuals without the condition who test negative (true negatives). For instance, a study evaluating a new diagnostic test for a rare disease reported a sensitivity of 95% and a specificity of 90%. This means the test correctly identified 95% of the individuals with the disease and correctly returned a negative result for 90% of those without it. These metrics are crucial for understanding the test's reliability and its potential impact on clinical decision-making.
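The two definitions can be made concrete with a short calculation. The sketch below is illustrative only: the class name, function names, and the 2x2 counts are hypothetical, chosen so that the result matches the 95% and 90% figures quoted above rather than taken from any real study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """2x2 counts of index-test results against the reference standard."""
    true_pos: int   # condition present, test positive
    false_neg: int  # condition present, test negative
    true_neg: int   # condition absent, test negative
    false_pos: int  # condition absent, test positive


def sensitivity(c: ConfusionCounts) -> float:
    """Proportion of individuals with the condition who test positive."""
    return c.true_pos / (c.true_pos + c.false_neg)


def specificity(c: ConfusionCounts) -> float:
    """Proportion of individuals without the condition who test negative."""
    return c.true_neg / (c.true_neg + c.false_pos)


# Hypothetical counts (assumption): 100 individuals with the condition,
# 200 without it.
counts = ConfusionCounts(true_pos=95, false_neg=5, true_neg=180, false_pos=20)

print(f"sensitivity = {sensitivity(counts):.2f}")
print(f"specificity = {specificity(counts):.2f}")
```

Both measures are computed within a reference-standard group (with or without the condition), which is why they do not change when the mix of the two groups changes.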

The positive predictive value (PPV) and negative predictive value (NPV) describe the clinical utility of an individual IVD test result. The PPV is the probability that a positive test result truly indicates the presence of a disease, while the NPV is the probability that a negative test result truly indicates its absence. Unlike sensitivity and specificity, these values depend on disease prevalence, so they matter most when a test is used in populations with different prevalence rates. For example, in a low-prevalence setting, a test with high sensitivity but low specificity may produce far more false positives than true positives, which substantially lowers its PPV.
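The dependence on prevalence follows from Bayes' theorem, and a small calculation makes it visible. The sketch below is a minimal illustration: the function name and the prevalence values are assumptions made for this example, and the 95% sensitivity and 90% specificity are used purely as example inputs.

```python
from typing import Tuple


def predictive_values(sens: float, spec: float, prevalence: float) -> Tuple[float, float]:
    """Return (PPV, NPV) for a test applied at a given disease prevalence."""
    # Expected fraction of the tested population in each cell of the 2x2 table.
    true_pos = sens * prevalence
    false_neg = (1 - sens) * prevalence
    true_neg = spec * (1 - prevalence)
    false_pos = (1 - spec) * (1 - prevalence)

    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Hypothetical prevalence values (assumption), from rare to common.
for prevalence in (0.001, 0.01, 0.10, 0.30):
    ppv, npv = predictive_values(sens=0.95, spec=0.90, prevalence=prevalence)
    print(f"prevalence={prevalence:.3f}  PPV={ppv:.3f}  NPV={npv:.3f}")
```

With these inputs, the PPV at 1% prevalence is below 10%, even though sensitivity and specificity are unchanged. This is the false-positive effect described above.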

The design of clinical performance studies is crucial for obtaining reliable and valid results. The choice of study design depends on the test purpose and the nature of the target condition. Common study designs include cross-sectional studies, cohort studies, and randomized controlled trials.

Cross-sectional studies are well-suited for evaluating diagnostic and screening tests. In this study design, the index test and the reference standard are conducted concurrently on the same population. This approach enables a direct comparison of the test results with the reference standard, providing immediate insights into the test’s accuracy. For instance, a cross-sectional study assessing a new screening test for a common cancer reported a sensitivity of 92% and a specificity of 88%, which were compared with the gold standard diagnostic procedure to offer a clear assessment of the test’s performance.
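Because both tests are performed on the same individuals, the analysis reduces to cross-tabulating paired results. The sketch below shows one way this could be done. The function name and the paired data are hypothetical, constructed so that the estimates match the 92% and 88% figures in the example above.

```python
from collections import Counter
from typing import Iterable, Tuple

# Each pair is (index test positive?, reference standard positive?).
PairedResult = Tuple[bool, bool]


def cross_tabulate(pairs: Iterable[PairedResult]) -> Counter:
    """Count how often each combination of paired results occurs."""
    return Counter(pairs)


# Hypothetical paired results (assumption): 50 participants with the
# condition and 150 without it, according to the reference standard.
paired_results = (
    [(True, True)] * 46        # true positives
    + [(False, True)] * 4      # false negatives
    + [(False, False)] * 132   # true negatives
    + [(True, False)] * 18     # false positives
)

table = cross_tabulate(paired_results)
sens = table[(True, True)] / (table[(True, True)] + table[(False, True)])
spec = table[(False, False)] / (table[(False, False)] + table[(True, False)])

print(f"sensitivity = {sens:.2f}")
print(f"specificity = {spec:.2f}")
```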

Cohort studies are appropriate for assessing tests that predict future events, such as risk stratification and prognosis. In these studies, the index test is performed at baseline, and the study population is followed over time to observe the occurrence of the target condition. For instance, a cohort study evaluating a biomarker for predicting cardiovascular risk reported that individuals with elevated levels of the biomarker had a significantly higher risk of developing cardiovascular disease over a 10-year follow-up period. This design allows for the assessment of the test's predictive accuracy over time.
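One common way to summarize such a cohort is the relative risk, the ratio of cumulative risk in the group with elevated biomarker levels to that in the group without. The sketch below uses hypothetical function names and event counts, chosen only for illustration. It ignores censoring and time-to-event methods, which a real analysis would normally need.

```python
def cumulative_risk(events: int, participants: int) -> float:
    """Fraction of participants who developed the target condition."""
    return events / participants


def relative_risk(
    events_elevated: int,
    n_elevated: int,
    events_normal: int,
    n_normal: int,
) -> float:
    """Risk in the elevated-biomarker group divided by risk in the normal group."""
    return cumulative_risk(events_elevated, n_elevated) / cumulative_risk(
        events_normal, n_normal
    )


# Hypothetical follow-up results (assumption): biomarker measured at
# baseline, cardiovascular events counted over the follow-up period.
rr = relative_risk(events_elevated=60, n_elevated=400, events_normal=45, n_normal=900)

print(f"risk (elevated) = {cumulative_risk(60, 400):.2f}")
print(f"risk (normal)   = {cumulative_risk(45, 900):.2f}")
print(f"relative risk   = {rr:.2f}")
```

A relative risk well above 1 indicates that the baseline test result separates higher-risk from lower-risk individuals. Whether the difference is statistically significant requires a confidence interval or hypothesis test that this sketch does not include.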

Randomized controlled trials (RCTs) are the gold standard for evaluating tests that predict treatment benefit. In these studies, participants receive the index test and are randomized to either the targeted treatment or standard care. The outcomes are then compared between the two groups to determine the test's ability to identify individuals who will benefit from the targeted treatment. For example, an RCT evaluating a genetic test for predicting response to a specific cancer therapy demonstrated that patients with a positive test result had a significantly better response to the targeted therapy compared to those receiving standard treatment. This design provides direct evidence of the test's clinical utility in guiding treatment decisions.
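One way to express this comparison numerically is to estimate the treatment effect separately in test-positive and test-negative participants and then contrast the two. The sketch below assumes such a stratified analysis, which the text above does not specify. The class, function names, and all counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Arm:
    """Outcome summary for one randomized arm within one test-result stratum."""
    responders: int
    participants: int

    @property
    def response_rate(self) -> float:
        return self.responders / self.participants


def treatment_effect(targeted: Arm, standard: Arm) -> float:
    """Absolute difference in response rate (targeted minus standard care)."""
    return targeted.response_rate - standard.response_rate


# Hypothetical trial results (assumption), split by index-test result.
effect_test_positive = treatment_effect(Arm(60, 100), Arm(30, 100))
effect_test_negative = treatment_effect(Arm(32, 100), Arm(30, 100))

# A large contrast suggests the test identifies who benefits from treatment.
effect_contrast = effect_test_positive - effect_test_negative

print(f"effect in test-positive participants = {effect_test_positive:.2f}")
print(f"effect in test-negative participants = {effect_test_negative:.2f}")
print(f"difference between strata            = {effect_contrast:.2f}")
```

In this made-up example, the targeted treatment improves response mainly in test-positive participants, which is the pattern expected of a test that predicts treatment benefit. A formal analysis would test the treatment-by-test interaction rather than compare point estimates.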

Regulatory bodies play a crucial role in ensuring the safety and efficacy of IVD tests. The FDA and EMA have established guidelines and requirements for clinical performance evaluation. These guidelines require the quality of clinical evidence to be proportional to the risk associated with the test. For high-risk tests, such as those used in critical care settings, stringent evidence is required to demonstrate their clinical performance and safety.

Harmonized guidance across stakeholders is essential for consistent evaluation and approval of IVD tests. The European Federation of Clinical Chemistry and Laboratory Medicine (EFLM) has developed a comprehensive checklist for formulating research objectives and study designs to evaluate clinical performance. This checklist includes key elements such as test purpose, target condition, clinical performance measures, and study design types. By following this checklist, stakeholders can ensure that their evaluation processes are aligned with regulatory requirements and best practices.

Evaluating the clinical performance of IVD tests presents several practical challenges. One significant issue is the application of clinical performance data from one manufacturer's assay to other assays measuring the same biomarker. Standardization and harmonization of assays are crucial to ensure consistent results across different platforms. Additionally, the regulatory timeline for submitting clinical performance evidence can be demanding, particularly for tests already established in routine practice.

To address these challenges, future efforts should focus on developing more detailed guidance on study design for each common type of test purpose. Initiatives to link laboratory databases with clinical records and to adopt standardized structured reporting of test results in electronic health records (EHRs) can enhance the generation of real-world data for post-market surveillance. For example, a recent study demonstrated the feasibility of integrating laboratory data with EHRs to monitor the performance of IVD tests in real time. This approach can provide valuable insights into a test's performance in diverse clinical settings and patient populations.

The clinical performance evaluation of IVD tests is a complex but essential process that ensures these tests meet the high standards required for clinical use. By understanding and applying the principles of clinical performance measures, study design, and regulatory considerations, stakeholders can ensure that IVD tests deliver reliable and actionable results. As the field of IVD continues to evolve, ongoing collaboration and innovation will be crucial to address emerging challenges and harness the full potential of these diagnostic tools.



